
컴공생의 발자취

[AI] Model Construction Code and Analysis (1)


Model configuration table

| Input layer | Conv1 | Batch Normalization | Max Pooling | Conv2 | Batch Normalization | Max Pooling | Conv3 | Batch Normalization | Max Pooling | Flatten | FC | Dropout | FC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 32x32 | 3x3x32 | | | 3x3x64 | | | 3x3x128 | | | | 64 | 0.25 | 10 |

* padding = 'valid', strides = (1,1), activation = 'relu'

* Optimizer = Adam, learning rate = 0.001
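With `padding='valid'` and `strides=(1,1)`, each 3x3 convolution shrinks the feature map by 2 pixels per side, and each 2x2 max pooling halves it (rounding down). The following is a minimal sketch of that arithmetic in plain Python; the helper names `conv_out` and `pool_out` are made up for illustration and are not part of Keras.

```python
def conv_out(size, kernel=3, stride=1):
    """Output width/height of a convolution with 'valid' (no) padding."""
    return (size - kernel) // stride + 1


def pool_out(size, pool=2):
    """Output width/height of a max pool whose stride equals its window size."""
    return size // pool


size = 32  # CIFAR-10 images are 32x32
shapes = []
for filters in (32, 64, 128):  # Conv1, Conv2, Conv3 from the table
    size = conv_out(size)
    shapes.append(("conv", size, filters))
    size = pool_out(size)  # BatchNormalization does not change the shape
    shapes.append(("pool", size, filters))

# Flatten turns the final (size, size, 128) feature map into one vector.
flatten_units = size * size * 128

for kind, side, channels in shapes:
    print(f"{kind}: {side}x{side}x{channels}")
print("flatten:", flatten_units)
```

The spatial size goes 32, 30, 15, 13, 6, 4, 2, so the Flatten layer feeds 2 x 2 x 128 = 512 values into the first FC layer.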

Full code

import tensorflow as tf

import numpy as np

from keras.models import Sequential

from keras.layers import Dense

# Load the CIFAR-10 dataset. Pixel values are converted from integers to floats further down.

(train_images, train_labels), (test_images, test_labels) = tf.keras.datasets.cifar10.load_data()

class_name = ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

import matplotlib.pyplot as plt

plt.figure(figsize=(6,1))

# Show the first 36 training images in a 3x12 grid.
for i in range(36):

    plt.subplot(3, 12, i+1)

    plt.imshow(train_images[i], cmap="brg") # cmap is ignored for RGB image data

    plt.axis("off")

plt.show()

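# The CIFAR-10 arrays already have these shapes, so the reshape only makes them explicit.
train_images = train_images.reshape((50000, 32, 32, 3))

test_images = test_images.reshape((10000, 32, 32, 3))

# Scale pixel values from [0, 255] to [0, 1].
train_images, test_images = train_images/255.0, test_images/255.0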

from keras.utils import to_categorical

# Build a tf.keras.Sequential model by stacking the layers in order.

# Then choose the optimizer and loss function used for training:

model = tf.keras.models.Sequential()

model.add(tf.keras.layers.Conv2D(32, (3, 3), activation='relu', padding='valid', strides=(1,1), input_shape=(32, 32, 3)))

model.add(tf.keras.layers.BatchNormalization())

# model.add(tf.keras.layers.Dropout(0.25)) # for comparison with BatchNormalization

model.add(tf.keras.layers.MaxPooling2D((2, 2)))

model.add(tf.keras.layers.Conv2D(64, (3, 3), activation='relu'))

model.add(tf.keras.layers.BatchNormalization())

# model.add(tf.keras.layers.Dropout(0.25)) # for comparison with BatchNormalization

model.add(tf.keras.layers.MaxPooling2D((2, 2)))

model.add(tf.keras.layers.Conv2D(128, (3, 3), activation='relu'))

model.add(tf.keras.layers.BatchNormalization())

# model.add(tf.keras.layers.Dropout(0.25)) # for comparison with BatchNormalization

model.add(tf.keras.layers.MaxPooling2D((2, 2)))

model.add(tf.keras.layers.Flatten())

model.add(tf.keras.layers.Dense(64, activation='relu'))

model.add(tf.keras.layers.Dropout(0.25))

model.add(tf.keras.layers.Dense(10, activation='softmax'))

model.compile(optimizer=tf.optimizers.Adam(learning_rate=0.001), loss='sparse_categorical_crossentropy', metrics=['accuracy'])

model.summary()

history = model.fit(train_images, train_labels, epochs=5, batch_size=32)

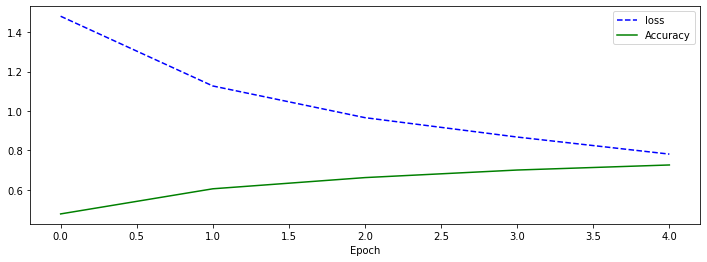
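The `compile` call uses `sparse_categorical_crossentropy`, which is why the integer labels returned by `load_data()` go straight into `fit` without a `to_categorical` step. The sketch below, in plain Python rather than Keras, shows that for a single sample the sparse loss equals ordinary categorical cross-entropy on the one-hot version of the label. The probabilities and the 3-class setup are invented for illustration.

```python
import math


def one_hot(label, num_classes):
    """One-hot encode an integer label, like to_categorical does per sample."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def categorical_crossentropy(target, probs):
    """Cross-entropy between a one-hot target and predicted probabilities."""
    return -sum(t * math.log(p) for t, p in zip(target, probs))


def sparse_categorical_crossentropy(label, probs):
    """Same loss, computed directly from the integer label."""
    return -math.log(probs[label])


probs = [0.1, 0.7, 0.2]  # hypothetical softmax output for one image
label = 1                # integer class index, as CIFAR-10 labels are stored

dense_loss = categorical_crossentropy(one_hot(label, 3), probs)
sparse_loss = sparse_categorical_crossentropy(label, probs)
print(dense_loss, sparse_loss)
```

Both values are `-log(0.7)`. The sparse form just skips building the one-hot vectors.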

plt.figure(figsize=(12,4)) # figure width and height in inches

plt.subplot(1,1,1) # first position in a 1x1 grid

plt.plot(history.history['loss'], 'b--', label='loss') # loss: blue dashed line

plt.plot(history.history['accuracy'], 'g-', label='Accuracy') # accuracy: green solid line

plt.xlabel('Epoch')

plt.legend()

plt.show()

print('최적화 완료!')

print('\n=====test result=====')

labels=model.predict(test_images)

# verbose=2 prints a single summary line instead of a progress bar.

print('\n Accuracy: %.4f' % (model.evaluate(test_images, test_labels, verbose=2)[1]))

# [0]: loss, [1]: accuracy

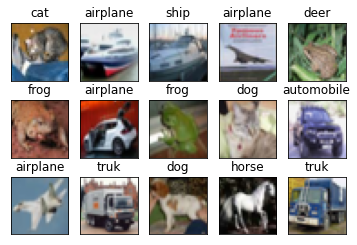

fig=plt.figure()

# Show the first 15 test images, each titled with its predicted class.
for i in range(15):

    subplot=fig.add_subplot(3, 5, i+1)

    subplot.set_xticks([])

    subplot.set_yticks([])

    subplot.set_title('%s' % class_name[np.argmax(labels[i])])

    subplot.imshow(test_images[i].reshape((32, 32, 3)), cmap=plt.cm.brg)

plt.show()
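The plot titles come from taking the index of the largest softmax probability and looking it up in the class list. Here is a small sketch of that mapping, plus an accuracy check against the true labels, written in plain Python. The three probability rows and the labels are hypothetical values, not actual model output.

```python
class_names = ['airplane', 'automobile', 'bird', 'cat', 'deer',
               'dog', 'frog', 'horse', 'ship', 'truck']


def argmax(values):
    """Index of the largest value, like np.argmax on a 1-D array."""
    return max(range(len(values)), key=lambda i: values[i])


# Hypothetical softmax outputs for three images (each row sums to 1).
predictions = [
    [0.05, 0.05, 0.05, 0.60, 0.05, 0.05, 0.05, 0.05, 0.03, 0.02],
    [0.02, 0.08, 0.02, 0.02, 0.02, 0.02, 0.02, 0.02, 0.70, 0.08],
    [0.70, 0.02, 0.10, 0.02, 0.02, 0.02, 0.02, 0.02, 0.06, 0.02],
]
# CIFAR-10 labels are stored with shape (N, 1), hence the nested lists.
true_labels = [[3], [8], [8]]

predicted_ids = [argmax(row) for row in predictions]
predicted_names = [class_names[i] for i in predicted_ids]
correct = sum(p == t[0] for p, t in zip(predicted_ids, true_labels))
accuracy = correct / len(true_labels)

print(predicted_names, accuracy)
```

In this toy case the third image is misclassified as 'airplane' instead of 'ship', so two of three predictions are correct.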


Full output

Downloading data from https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz

170498071/170498071 [==============================] - 4s 0us/step

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d (Conv2D) (None, 30, 30, 32) 896

batch_normalization (BatchN (None, 30, 30, 32) 128

ormalization)

max_pooling2d (MaxPooling2D (None, 15, 15, 32) 0

)

conv2d_1 (Conv2D) (None, 13, 13, 64) 18496

batch_normalization_1 (Batc (None, 13, 13, 64) 256

hNormalization)

max_pooling2d_1 (MaxPooling (None, 6, 6, 64) 0

2D)

conv2d_2 (Conv2D) (None, 4, 4, 128) 73856

batch_normalization_2 (Batc (None, 4, 4, 128) 512

hNormalization)

max_pooling2d_2 (MaxPooling (None, 2, 2, 128) 0

2D)

flatten (Flatten) (None, 512) 0

dense (Dense) (None, 64) 32832

dropout (Dropout) (None, 64) 0

dense_1 (Dense) (None, 10) 650

=================================================================

Total params: 127,626

Trainable params: 127,178

Non-trainable params: 448

_________________________________________________________________
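The parameter counts in the summary can be reproduced by hand. The sketch below assumes the usual formulas: a convolution has one weight per kernel cell, input channel and filter plus one bias per filter, a dense layer has one weight per input-unit pair plus one bias per unit, and BatchNormalization keeps four values per channel, of which the moving mean and variance are not trained. The helper names are made up for illustration.

```python
def conv2d_params(kernel, in_channels, filters):
    """Weights (kernel*kernel*in_channels per filter) plus one bias per filter."""
    return kernel * kernel * in_channels * filters + filters


def dense_params(inputs, units):
    """One weight per input-unit pair plus one bias per unit."""
    return inputs * units + units


def batchnorm_params(channels):
    """Return (trainable, non_trainable): gamma and beta vs. moving mean and variance."""
    return 2 * channels, 2 * channels


trainable = 0
non_trainable = 0
in_channels = 3  # RGB input
for filters in (32, 64, 128):
    trainable += conv2d_params(3, in_channels, filters)
    bn_trainable, bn_frozen = batchnorm_params(filters)
    trainable += bn_trainable
    non_trainable += bn_frozen
    in_channels = filters

trainable += dense_params(2 * 2 * 128, 64)  # Flatten output (512) -> FC 64
trainable += dense_params(64, 10)           # FC 64 -> 10 classes
total = trainable + non_trainable

print(total, trainable, non_trainable)
```

This gives 127,626 total, 127,178 trainable and 448 non-trainable parameters, matching the summary. All 448 non-trainable values are the running statistics of the three BatchNormalization layers.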

Epoch 1/5

1563/1563 [==============================] - 17s 4ms/step - loss: 1.4809 - accuracy: 0.4772

Epoch 2/5

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1268 - accuracy: 0.6047

Epoch 3/5

1563/1563 [==============================] - 7s 4ms/step - loss: 0.9659 - accuracy: 0.6616

Epoch 4/5

1563/1563 [==============================] - 7s 4ms/step - loss: 0.8675 - accuracy: 0.7000

Epoch 5/5

1563/1563 [==============================] - 7s 4ms/step - loss: 0.7808 - accuracy: 0.7257

최적화 완료!

=====test result=====

313/313 [==============================] - 1s 2ms/step

313/313 - 1s - loss: 0.8938 - accuracy: 0.6941 - 911ms/epoch - 3ms/step

Accuracy: 0.6941
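The step counts in the log (1563 per training epoch, 313 for prediction and evaluation) follow from the dataset sizes and the batch size. A short sketch of that calculation, assuming the batch size of 32 that `fit` was given and that Keras uses by default for `predict` and `evaluate`:

```python
import math


def steps_per_epoch(num_samples, batch_size=32):
    """Number of batches needed to see every sample once (last batch may be smaller)."""
    return math.ceil(num_samples / batch_size)


train_steps = steps_per_epoch(50000)  # CIFAR-10 training set
test_steps = steps_per_epoch(10000)   # CIFAR-10 test set

# Size of the final, partially filled training batch.
last_train_batch = 50000 - (train_steps - 1) * 32

print(train_steps, test_steps, last_train_batch)
```

Since 50000 / 32 = 1562.5, the last training batch holds only 16 images. Note also that the final training accuracy (0.7257) is higher than the test accuracy (0.6941), which is the usual gap between data the model was fitted on and unseen data.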
